Category: Hardware

Making Robot Friends with RemoTV

Years ago at Maker Faire I saw a person being followed by a rather impressive robot that seemed to have a mind of its own. Later I found out that the robot was being controlled by people over the internet through a site called LetsRobot. While that project is no more, the person I met, Jillian Ogle, is the creator of its successor, RemoTV.

Time to grow some data…

Last weekend I decided to build a monitoring system for the planter boxes on my deck. I wanted to collect data on soil moisture and light levels from at least two of the four planter boxes. The catch is that I don’t have an outlet to plug it all into, and I want to log the data to Google Sheets so that I can graph everything.

So I grabbed a solar panel, whipped up a voltage regulator, connected a rechargeable battery, and that took care of the power. For the microcontroller I used a Particle Photon, mostly due to its abundance of analog input pins, and connected a SparkFun soil sensor with an added photoresistor for light readings. The Photon reads the analog value of each sensor and adds a new row to my Google Sheets doc via IFTTT. After the data is sent, the Photon goes into deep sleep, and the cycle repeats every 10 minutes. This way the sensor only sips power and can easily run on solar alone.

So here is the data:

My next step is to collect enough data to flag events that I want to be notified about, like when the moisture level gets low enough that I should water my plants, or when my plants are about to die from extreme dryness. I can also see how much daylight a particular box is getting, so I know which plants need to go where. If I had access to a water outlet I could have it water the garden automatically, but for now my water bucket works well enough.
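Here is a rough sketch of how that kind of event flagging could work once the readings are out of the spreadsheet. It is not what is running on my deck: the function names and the two thresholds are made up for illustration, and it assumes that a higher raw reading means wetter soil. The only hard number is the 0 to 4095 range, which is what the Photon's 12-bit analog inputs return.

```python
# Sketch only: thresholds and names below are hypothetical, not measured values.
ADC_MAX = 4095       # Photon analog reads are 12-bit (0-4095)
WATER_SOON = 0.35    # assumed fraction of full scale below which to water
CRITICAL = 0.15      # assumed fraction below which plants are in trouble


def moisture_fraction(raw):
    """Scale a raw ADC reading to 0.0-1.0, clamping out-of-range values."""
    raw = max(0, min(ADC_MAX, raw))
    return raw / ADC_MAX


def classify(raw):
    """Map one raw reading to 'ok', 'water soon', or 'critical'."""
    level = moisture_fraction(raw)
    if level < CRITICAL:
        return "critical"
    if level < WATER_SOON:
        return "water soon"
    return "ok"


def flag_events(readings):
    """Return (index, status) whenever a reading enters a new non-ok status."""
    events = []
    previous = "ok"
    for i, raw in enumerate(readings):
        status = classify(raw)
        if status != previous and status != "ok":
            events.append((i, status))
        previous = status
    return events


if __name__ == "__main__":
    # Made-up sample readings, one per wake-up.
    print(flag_events([3000, 1200, 1100, 400, 3000]))
```

Only reporting a status when it changes keeps a dry afternoon from turning into a notification every 10 minutes. The real thresholds would have to come from watching the logged data for a while.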

New RetroPie controller

So this is happening tonight…

It is using an Adafruit 32u4 Feather microcontroller with a program that sends keyboard commands over USB. It works quite well and I have plenty of pins left for more stuff.

Another late night at the shop.

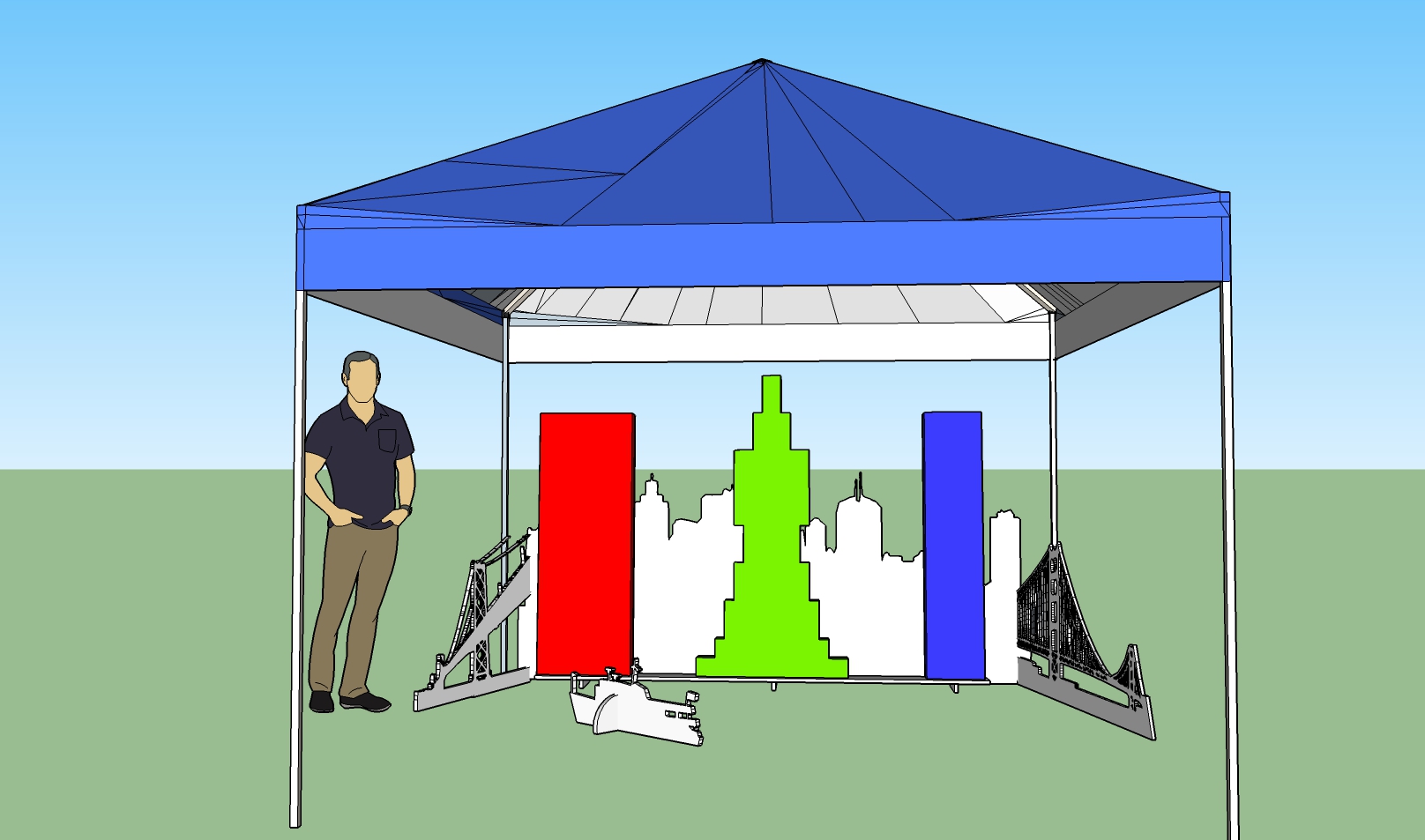

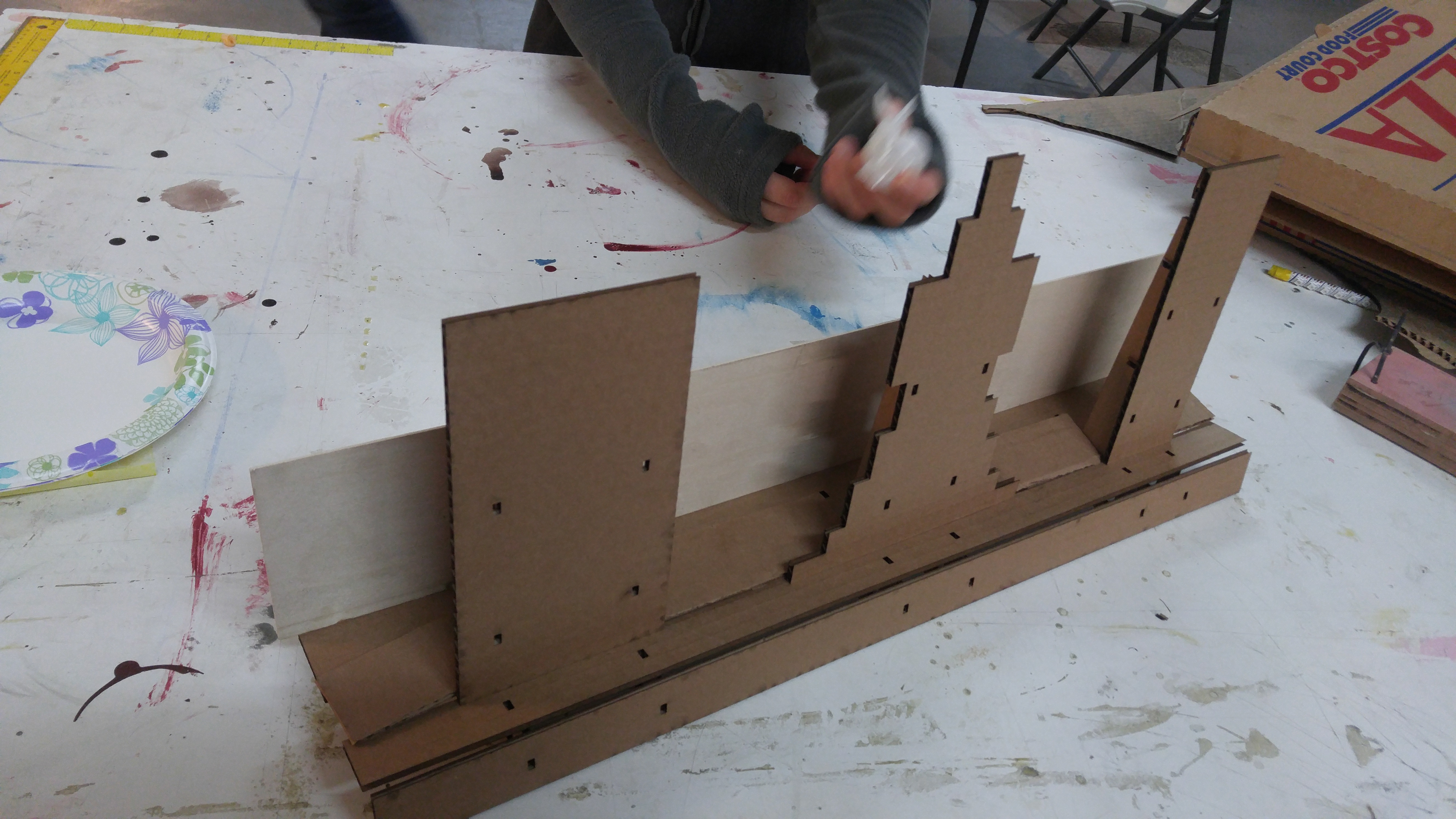

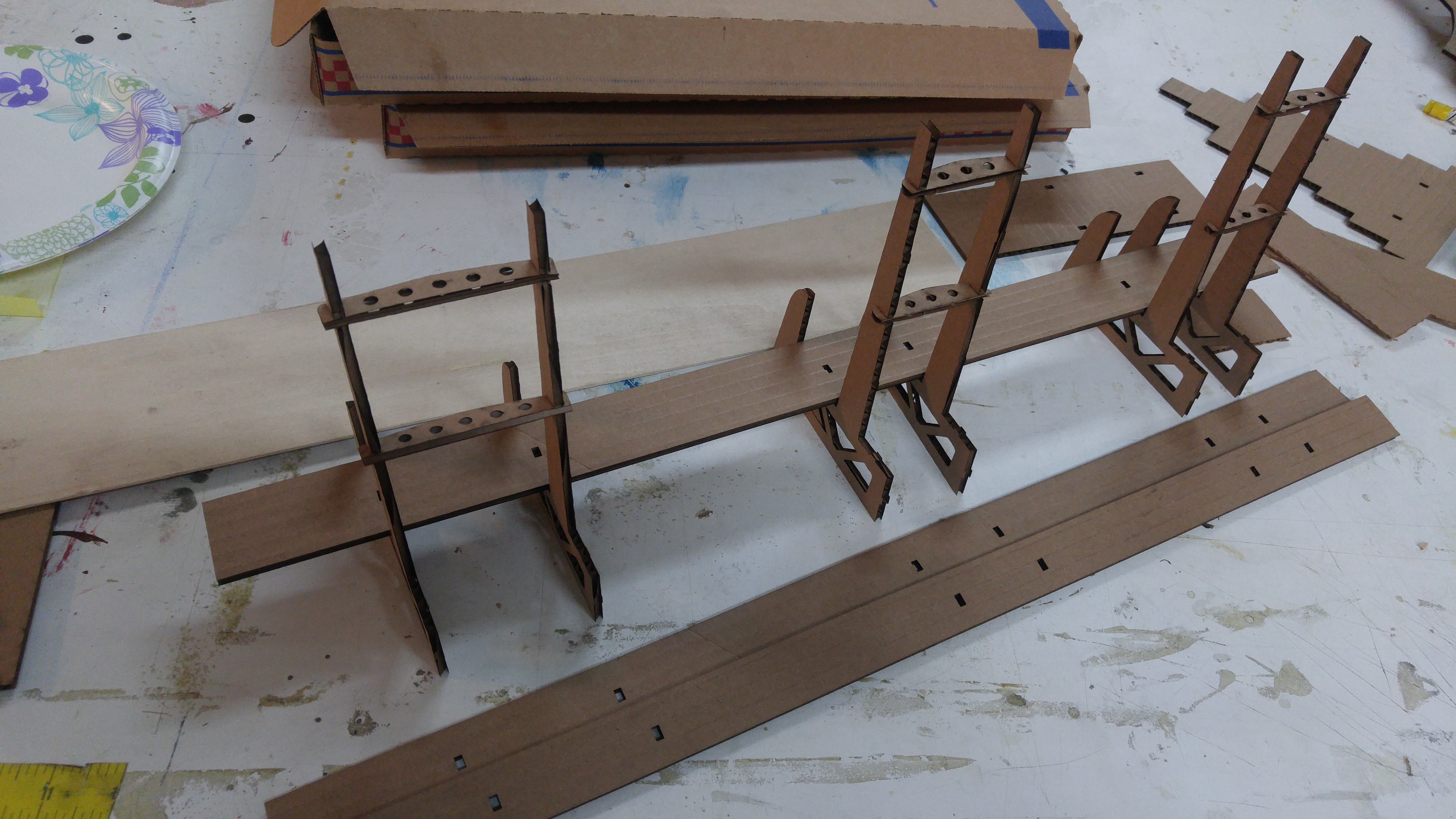

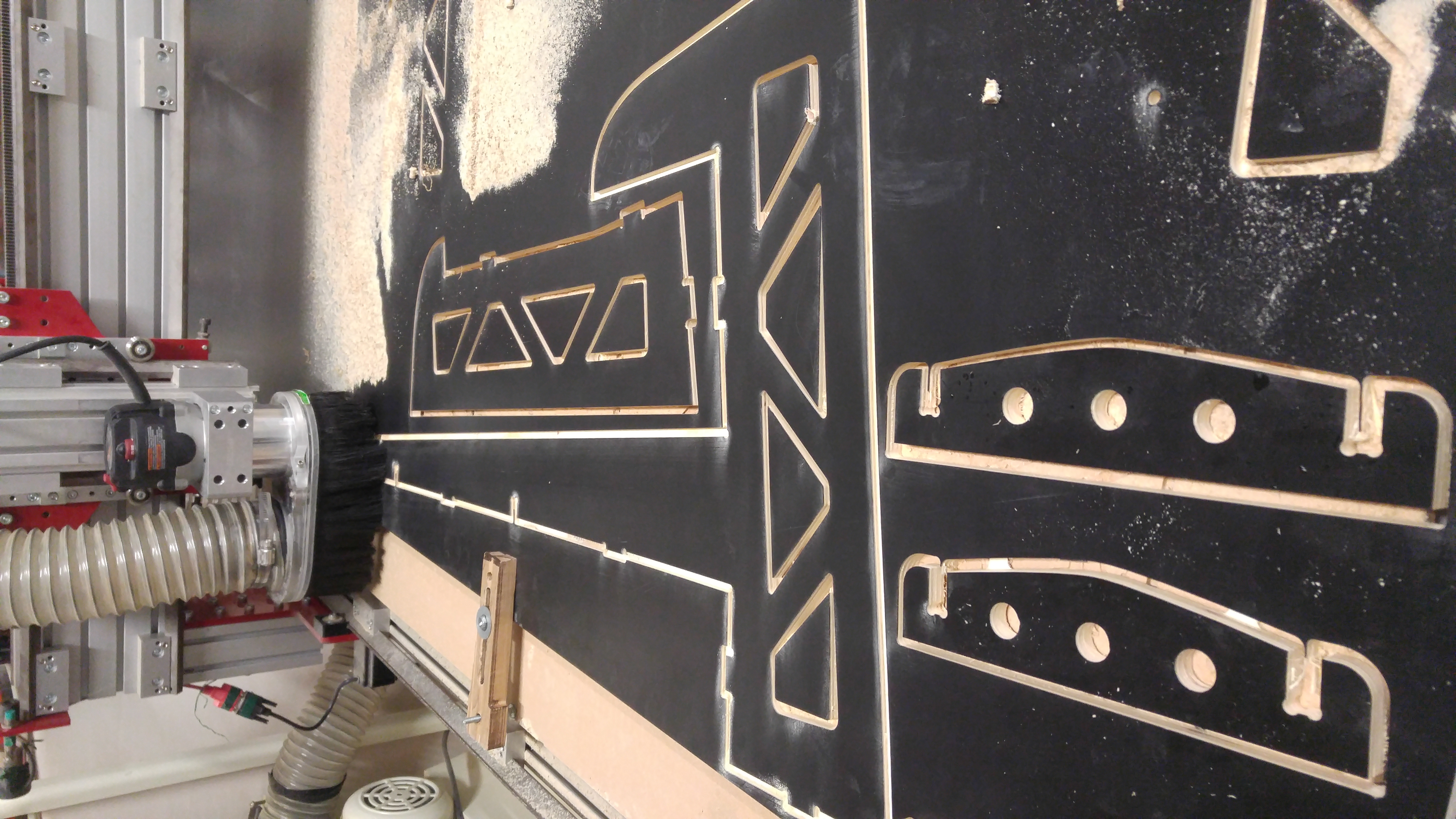

I have been helping out with a project that will be featured at the Bay Area Maker Faire. This will be my first time there and I am super excited about it, but the deadline is tight. So yesterday I designed the structure for the display, prototyped it in cardboard, then cut out all of the main supports on the CNC. I was there until 2am, but the parts look amazing and the fit is tight and solid.

The challenges are that it all has to flat-pack for shipping, assemble quickly, and survive many potential shipments. So this is my solution.

Tomorrow I should have the basic structure built and will start installing electronics.

Micro:Bit Servo Robot

I have been trying to get my servo-powered robot, Hack-e-Bot, working with the Micro:Bit so that younger kids can program its basic movement to navigate through an obstacle course. I now have custom blocks that can be used to control any servo-powered robot using Pin 0 and Pin 1.
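To give an idea of what blocks like these have to work out under the hood, here is a small Python sketch of the math. It is not the code behind my custom blocks. It assumes continuous-rotation servos that stop at a 1.5 ms pulse, a standard 20 ms servo frame, the left servo on Pin 0, and the right servo on Pin 1 mounted mirrored; the function names are made up for illustration. The 0 to 1023 range is the Micro:Bit's analog write range.

```python
# Sketch only: servo type, wiring, and function names are assumptions.
PERIOD_MS = 20.0   # typical hobby-servo frame length
DUTY_MAX = 1023    # Micro:Bit analog write values run from 0 to 1023


def pulse_to_duty(pulse_ms):
    """Convert a pulse width in ms to an analog write value for a 20 ms period."""
    return round(pulse_ms / PERIOD_MS * DUTY_MAX)


def speed_to_pulse(speed):
    """Map speed in -1.0..1.0 to a 1.0..2.0 ms pulse (1.5 ms assumed to be stop)."""
    speed = max(-1.0, min(1.0, speed))
    return 1.5 + 0.5 * speed


def drive(left, right):
    """Return the analog values for Pin 0 (left) and Pin 1 (right).

    The right servo is assumed to be mirrored, so its speed is negated
    to make positive numbers mean "forward" on both wheels.
    """
    return {
        "pin0": pulse_to_duty(speed_to_pulse(left)),
        "pin1": pulse_to_duty(speed_to_pulse(-right)),
    }


if __name__ == "__main__":
    print(drive(1.0, 1.0))   # full speed forward
    print(drive(0.0, 0.0))   # stop
```

On the board itself, with MicroPython, these values would go to `pin0.write_analog()` and `pin1.write_analog()` after calling `set_analog_period(20)` on each pin.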